- Establish close cooperation across the institution, including cyber, fraud, AML, and credit risk teams

AI-enabled fraud, like many other types of fraud, cuts across multiple teams within an organization, including cyber, fraud, AML, and credit risk. To ensure a unified response, the organization should establish close cooperation, for example through working groups with representatives from all relevant departments. This ensures that knowledge is shared while minimizing duplication and gaps. Datasets should also be integrated to provide a more complete view, which makes anomalies easier to identify.

For example:

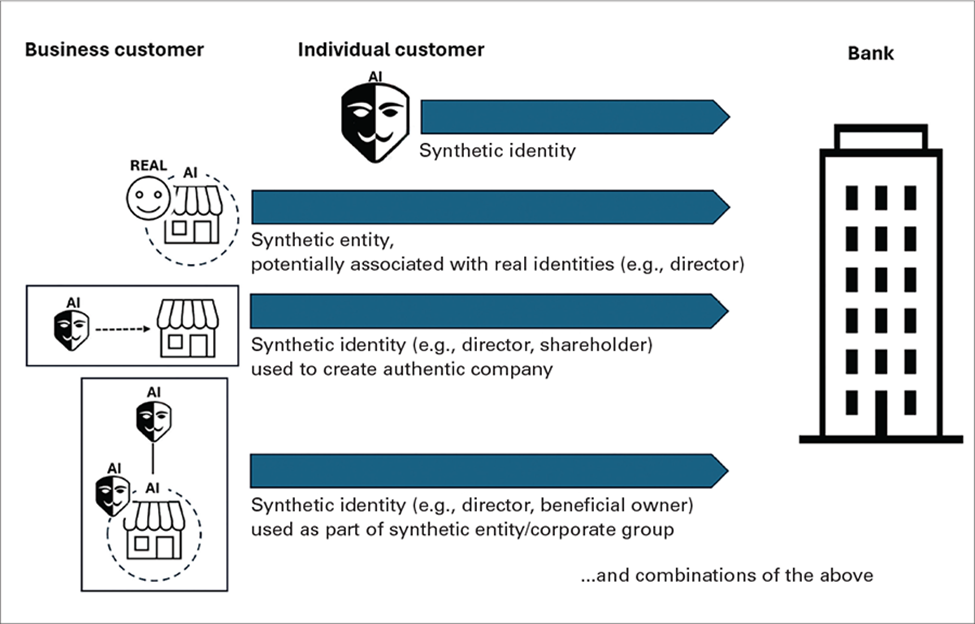

- The activity on accounts set up using synthetic identities may mimic that of authentic individuals. Even when a significant loss occurs, it may be presumed to result from a real person defaulting on their credit and be written off as a credit loss. Collaboration between the credit and fraud teams could uncover that the identity used to open the account was synthetic. This should then prompt further investigation to ensure that the same synthetic identity or personal information has not been used to open other accounts.

- Identifying and preventing fraud perpetrated by remote North Korean IT workers requires coordination between teams, including Human Resources (HR) to implement appropriate hiring controls such as identity verification, and cyber teams to monitor remote work access and activities. (For more information on fraud using North Korean workers, see the sidebar to this article titled “AI-enhanced national security and sanctions risks to banks: North Korean remote IT workers.”)

- Assess policies, processes, systems, and controls, focusing specifically on AI fraud risks

Financial institutions should be vigilant, continuously reviewing and adapting their anti-fraud countermeasures as fraud methodologies evolve. Sources of information include internal investigations, law enforcement and regulatory advisories, and information sharing within the industry.

Policies, processes, systems, and controls should be reviewed and designed to manage AI-specific risks. For example:

- The institution’s risk assessment should specifically consider AI-enabled fraud. Some institutions are more susceptible to executive impersonation, whereas those processing high transaction volumes are more vulnerable to financial fraud. The institution should develop risk management measures appropriate to its risk profile.

- When an institution uses a third-party digital identification and verification solution, its vendor evaluation process should assess the measures the software uses to detect AI-enhanced or AI-generated identities and entities, to ensure they meet the institution’s standards.

- The procedure followed after a credit loss should include an investigation into whether the loss resulted from synthetic identity or entity fraud; if it did, this should prompt the further analysis described below.

Financial institutions can also consider using analytics and AI to enhance their anti-fraud measures, deploying AI as a “shield” against its use by fraudsters as a “sword.”

- Ongoing monitoring and assessment

Ongoing monitoring and assessment are key both to identifying fraud and to preventing further fraud. Risk management measures that can improve the identification of, and response to, AI-specific frauds throughout the client lifecycle include:14

- Ongoing comparison of account data across the client base. Data elements (e.g. an SSN or address) are often re-used to create multiple synthetic identities, which are then used to open further accounts. If a new account is opened with an SSN that is already associated with a different, existing customer, this indicates that one or both identities may be synthetic. The Social Security Administration’s electronic Consent Based Social Security Number Verification (eCBSV) service allows banks, with the individual’s consent, to check whether a name, date of birth, and SSN combination matches SSA records, helping them identify synthetic identities.

- Shared device or contact details across multiple clients. It is uncommon for multiple legitimate customers to share a device, email address, or phone number, but this can occur in fraud schemes where a single fraudster controls multiple synthetic identities.

- Behavioral profiling and anomaly detection using signals such as typing speed, mouse movements, and session patterns. These provide dynamic, individualized “signatures” that, unlike static identifiers such as passwords, are difficult to falsify or compromise.
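The first two checks above amount to linking accounts through any identifier they have in common. As a minimal sketch, assuming account records with hypothetical fields `ssn`, `device_id`, `email`, and `phone`, a union-find (disjoint-set) structure can group accounts linked directly or transitively and surface groups that span more than one customer name. Treating a differing name as the trigger is a simplifying assumption; a real deployment would need more robust entity resolution.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass

# Hypothetical identifier fields; a real data model will differ.
LINK_FIELDS = ("ssn", "device_id", "email", "phone")


@dataclass(frozen=True)
class Account:
    account_id: str
    customer_name: str
    ssn: str
    device_id: str
    email: str
    phone: str


class UnionFind:
    """Disjoint-set structure: accounts sharing any identifier end up in one set."""

    def __init__(self) -> None:
        self.parent: dict[str, str] = {}

    def find(self, x: str) -> str:
        self.parent.setdefault(x, x)
        while self.parent[x] != x:
            self.parent[x] = self.parent[self.parent[x]]  # path halving
            x = self.parent[x]
        return x

    def union(self, a: str, b: str) -> None:
        root_a, root_b = self.find(a), self.find(b)
        if root_a != root_b:
            self.parent[root_b] = root_a


def linked_clusters(accounts: list[Account]) -> list[set[str]]:
    """Return sets of account IDs linked (directly or transitively) by a shared
    identifier, keeping only sets that span more than one customer name."""
    uf = UnionFind()
    first_seen: dict[tuple[str, str], str] = {}
    for acct in accounts:
        uf.find(acct.account_id)  # register accounts that share nothing
        for field_name in LINK_FIELDS:
            value = getattr(acct, field_name)
            if not value:  # blank values must not link unrelated accounts
                continue
            key = (field_name, value)
            if key in first_seen:
                uf.union(first_seen[key], acct.account_id)
            else:
                first_seen[key] = acct.account_id

    groups: dict[str, set[str]] = defaultdict(set)
    for acct in accounts:
        groups[uf.find(acct.account_id)].add(acct.account_id)

    name_of = {acct.account_id: acct.customer_name for acct in accounts}
    return [ids for ids in groups.values() if len({name_of[i] for i in ids}) > 1]


accounts = [
    Account("A1", "Jane Roe", "123-45-6789", "dev-1", "jr@example.com", "555-0101"),
    Account("A2", "John Poe", "123-45-6789", "dev-2", "jp@example.com", "555-0102"),
    Account("A3", "Ann Loe", "987-65-4321", "dev-2", "al@example.com", "555-0103"),
    Account("A4", "Bob Moe", "111-22-3333", "dev-4", "bm@example.com", "555-0104"),
]
print([sorted(c) for c in linked_clusters(accounts)])  # [['A1', 'A2', 'A3']]
```

Here A1 and A2 share an SSN and A2 and A3 share a device, so all three are grouped for review even though no single identifier links A1 and A3 directly.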

If fraud losses occur (or there is a “near miss”), or other suspicious activity is identified, further analysis should be performed to identify the methodologies used and whether any other accounts show the same characteristics, since they too may be part of a wider fraud scheme. For example, in a confirmed credit card fraud, were the repayments made from an account that has also remitted payments for other credit cards in different names?
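The repayment question above can be generalized into a bounded network expansion from a confirmed fraud. The sketch below is illustrative only: it assumes repayment records are available as hypothetical (funding account, card) pairs plus a mapping from card to cardholder name, walks the card-to-funding-account graph breadth-first, and returns cards held in other names that are reachable within a set number of hops.

```python
from __future__ import annotations

from collections import defaultdict, deque


def expand_from_confirmed_fraud(
    confirmed_card: str,
    repayments: list[tuple[str, str]],
    cardholder: dict[str, str],
    max_hops: int = 2,
) -> set[str]:
    """Breadth-first search over a card <-> funding-account graph.

    repayments: hypothetical (funding_account_id, card_id) pairs.
    One hop = card -> funding account -> another card.
    Returns reachable cards whose holder name differs from the confirmed card's.
    """
    cards_by_funder: dict[str, set[str]] = defaultdict(set)
    funders_by_card: dict[str, set[str]] = defaultdict(set)
    for funder, card in repayments:
        cards_by_funder[funder].add(card)
        funders_by_card[card].add(funder)

    seen_cards = {confirmed_card}
    seen_funders: set[str] = set()
    queue = deque([(confirmed_card, 0)])
    while queue:
        card, hops = queue.popleft()
        if hops >= max_hops:
            continue  # do not expand beyond the hop limit
        for funder in funders_by_card[card] - seen_funders:
            seen_funders.add(funder)
            for other_card in cards_by_funder[funder] - seen_cards:
                seen_cards.add(other_card)
                queue.append((other_card, hops + 1))

    origin_name = cardholder.get(confirmed_card)
    # Cards with a missing holder name are kept, since they also merit review.
    return {
        c for c in seen_cards
        if c != confirmed_card and cardholder.get(c) != origin_name
    }


repayments = [("F1", "C1"), ("F1", "C2"), ("F2", "C2"), ("F2", "C3"), ("F3", "C4")]
cardholder = {"C1": "Jane Roe", "C2": "John Poe", "C3": "Ann Loe", "C4": "Bob Moe"}
print(sorted(expand_from_confirmed_fraud("C1", repayments, cardholder)))  # ['C2', 'C3']
```

Capping the number of hops keeps the review list manageable; each additional hop widens the net but also pulls in accounts that may be only incidentally connected.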

The Federal Reserve provides useful resources, including a checklist for post-loss analysis: https://fedpaymentsimprovement.org/wp-content/uploads/identifying-existing-synthetics-post-loss.pdf

While institutions should use “red flags” to identify fraud risk, they should also ensure these processes are applied responsibly. For example, well-established indicators of a falsified SSN include an SSN that does not correspond with the person’s age, the absence of a credit score, and a short address history. While these are red flags for a falsified SSN, they are also consistent with a valid SSN held by a person who has recently arrived in the United States.

Fraud solutions should therefore also include “negative indicators” and/or manual validation to determine whether red flags are “false positives.” For example, in the scenario above, the person may have opened their account with a passport and visa showing their date of arrival in the United States. Applying red flags imprecisely without adequate human oversight harms financial inclusion, and over-reliance on automated systems may even result in litigation, regulatory penalties, and the financial failure of the business.15
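To illustrate how negative indicators can sit alongside red flags, the following Python sketch checks the SSN red flags described above and lets a documented recent arrival offset only the flags it plausibly explains. The field names, the two-year address-history threshold, and the extra shared-SSN flag (taken from the account-comparison check earlier in this section) are hypothetical assumptions. Unexplained flags route the case to a person rather than to an automatic decline.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum

SHORT_ADDRESS_HISTORY_YEARS = 2.0  # hypothetical threshold

# Red flags that a documented recent arrival in the U.S. plausibly explains.
# A shared SSN is deliberately absent: recent arrival does not explain it.
EXPLAINED_BY_RECENT_ARRIVAL = {"ssn_age_mismatch", "no_credit_score", "short_address_history"}


class Outcome(Enum):
    PROCEED = "proceed"                      # no red flags raised
    PROCEED_EXPLAINED = "proceed_explained"  # every flag offset by a negative indicator
    MANUAL_REVIEW = "manual_review"          # unexplained flags: a person decides


@dataclass
class Applicant:
    ssn_consistent_with_age: bool
    has_credit_score: bool
    address_history_years: float
    ssn_shared_with_other_customer: bool
    documented_recent_arrival: bool  # e.g. passport and visa showing arrival date


def red_flags(a: Applicant) -> set[str]:
    flags: set[str] = set()
    if not a.ssn_consistent_with_age:
        flags.add("ssn_age_mismatch")
    if not a.has_credit_score:
        flags.add("no_credit_score")
    if a.address_history_years < SHORT_ADDRESS_HISTORY_YEARS:
        flags.add("short_address_history")
    if a.ssn_shared_with_other_customer:
        flags.add("ssn_shared_with_other_customer")
    return flags


def triage(a: Applicant) -> tuple[Outcome, set[str]]:
    """Return an outcome plus any unexplained red flags. Never declines automatically."""
    flags = red_flags(a)
    if not flags:
        return Outcome.PROCEED, set()
    # Negative indicator: remove only the flags the documents actually explain.
    unexplained = (flags - EXPLAINED_BY_RECENT_ARRIVAL) if a.documented_recent_arrival else flags
    if not unexplained:
        return Outcome.PROCEED_EXPLAINED, set()
    return Outcome.MANUAL_REVIEW, unexplained


newcomer = Applicant(
    ssn_consistent_with_age=False,
    has_credit_score=False,
    address_history_years=0.5,
    ssn_shared_with_other_customer=False,
    documented_recent_arrival=True,
)
print(triage(newcomer)[0])  # Outcome.PROCEED_EXPLAINED
```

Whether explained cases should still be sampled for manual quality assurance is a policy choice; the sketch only shows red flags and negative indicators being weighed together rather than applied in isolation.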

- Provide training for staff on AI fraud risks and indicators

Financial institutions should provide their staff with training specifically on AI fraud. To improve relevance and knowledge retention, training should be tailored to the fraud risks faced by each group of staff, for example:

- Customer-facing staff: how to identify indicators of AI-enhanced voice or video, as well as indicators that a customer may be a victim of fraud;

- Investigations teams (e.g. AML and fraud): red flags and investigative techniques for AI-enabled synthetic identity and entity fraud;

- HR staff: indicators of impersonation by North Korean IT workers and other types of hiring fraud; and

- Finance staff: how deepfakes can be used in “CEO frauds” in an attempt to induce unauthorized payments.

All staff should be trained on the actions required if they identify suspected or actual fraud.

- Support customer awareness

In addition to training staff, financial institutions should consider customer awareness campaigns that explain how AI can be used to perpetrate fraud. This strengthens the customer relationship by providing useful resources. It also reduces customers’ vulnerability to fraud, and with it the potential for financial institutions to incur losses or to be held accountable by regulators for inadequate controls to protect their customers. (See the sidebar “Bank responsibilities for defrauded customers: Best practices.”)

For example, awareness campaigns for corporate customers could focus on CEO frauds and how their business might be targeted. For business accounts, any change to payee information should trigger an out-of-band confirmation (through a separate channel, such as a call-back to a phone number already on file), the same type of control used to prevent business email compromise (BEC). Individual customers could be educated on elder fraud, romance scams, and family or medical emergency scams, among others. Customers could also be encouraged to learn the signs that their family members or friends might be targeted, so that they can intervene and support them more effectively.
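The out-of-band control for payee changes can be sketched as a hold that blocks payments to the changed payee until the change is confirmed through contact details already on file, never through details supplied with the change request itself. This is a minimal Python illustration; the class and method names are hypothetical, and blocking payments while a change is pending (rather than continuing to pay the old account) is one design choice among several.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Payee:
    account_number: str
    pending_account_number: str | None = None  # change awaiting confirmation


class PayeeChangeControl:
    """Hold payee changes until confirmed out of band (hypothetical sketch)."""

    def __init__(self, phone_on_file: str) -> None:
        # Assumption: recorded at onboarding, never taken from a change request.
        self.phone_on_file = phone_on_file
        self.payees: dict[str, Payee] = {}

    def add_payee(self, payee_id: str, account_number: str) -> None:
        self.payees[payee_id] = Payee(account_number)

    def request_change(self, payee_id: str, new_account_number: str) -> str:
        """Record the requested change and return the number to call back on."""
        self.payees[payee_id].pending_account_number = new_account_number
        return self.phone_on_file

    def confirm(self, payee_id: str, confirmed_via: str, approved: bool) -> None:
        payee = self.payees[payee_id]
        if payee.pending_account_number is None:
            raise ValueError("no pending change to confirm")
        if confirmed_via != self.phone_on_file:
            # A number supplied alongside the change request may belong to the fraudster.
            raise PermissionError("confirmation must use contact details on file")
        if approved:
            payee.account_number = payee.pending_account_number
        payee.pending_account_number = None  # approved or rejected, the hold is lifted

    def payable_account(self, payee_id: str) -> str:
        payee = self.payees[payee_id]
        if payee.pending_account_number is not None:
            raise RuntimeError("payee change pending out-of-band confirmation")
        return payee.account_number


control = PayeeChangeControl(phone_on_file="+1-555-0100")
control.add_payee("supplier-1", "111111")
call_back_on = control.request_change("supplier-1", "999999")
control.confirm("supplier-1", confirmed_via=call_back_on, approved=True)
print(control.payable_account("supplier-1"))  # 999999
```

If the customer rejects the change during the call-back, the pending details are discarded and payments continue to the original account.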

- Report and share intelligence

Financial institutions should share information with each other where permitted by regulation, and report it to the appropriate U.S. government agencies. This raises awareness of both fraud methodologies and specific fraud schemes, enabling them to be identified and contained more quickly, and supports law enforcement investigations. For example:

- Deepfakes should be reported to FinCEN, the National Security Agency (NSA) Cybersecurity Collaboration Center (for Department of Defense and Defense Industrial Base organizations), and the Federal Bureau of Investigation (FBI).

- Fraudulent identities should also be reported to credit bureaus to reduce the risk of their being re-used in the future.